In November 2022, OpenAI released ChatGPT to the world, and the response was nothing short of explosive.

Within five days, it snagged over 1 million users.

Two months later, that number ballooned to 100 million monthly active users.

Today, more than 300 million people tap into ChatGPT every week.

While the internet is buzzing with debates about AI sparking an apocalypse or stealing jobs, I’m more curious about the subtler risks slipping under our radar.

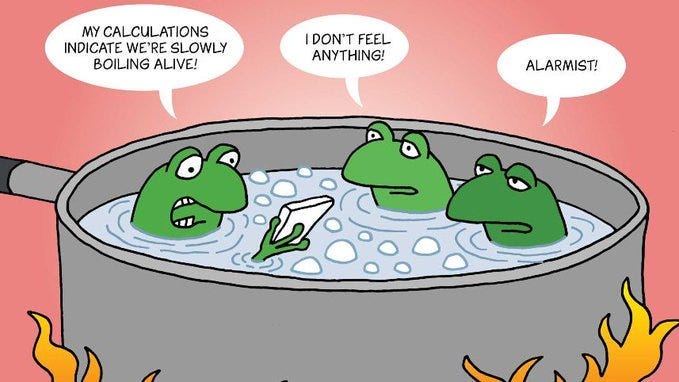

You know the old frog-in-boiling-water tale, right?

Drop a frog in hot water, and it leaps out; heat the water slowly, and it’s soup before it knows what hit it. And right now, we could be lounging in warm water, oblivious to the temperature creeping up.

Don’t get me wrong: I’m a huge AI fan. In fact, I guess you could call me an AI boomer. I use it daily, and I’m betting on its long-term upside. It’s changing the way we work and live.

But I wanted to peel back the curtain and spotlight four risks that might be hiding in plain sight:

privacy

algorithmic bias

intellectual laziness

concentration of power

They’re all happening right under our noses, and they’re worth a hard look.

Privacy

Ever wonder what happens to the stuff you tell an AI?

You might think it’s a one-and-done chat that vanishes when you close the tab.

Actually, no.

AI models like ChatGPT have a “context window,” the amount of text they can hold in view during a conversation. On top of that, ChatGPT keeps your chat history by default, and its memory feature can carry details from one chat into the next. Every question, every rant, every quirky detail about your life can get tucked away, building a profile of you. Your interests, your writing quirks, maybe even your deepest fears: it’s all fair game.

This isn’t evil by default.

The more an AI knows about you, the better its answers get.

But here’s the kicker: it’s gathering all this intel, and most of us never meaningfully consented to it.

Unlike Instagram tracking your likes or TikTok clocking your scroll time, AI gets the raw, unfiltered you—straight from your keyboard. That’s a goldmine for hyper-targeted ads or worse, and most of us don’t even blink.

Data harvesting isn’t new. But the scale of what AI can glean—and how intimately it knows us—should make us all pay attention.

Algorithmic Bias: Echo Chamber?

We should all know that AI isn’t some pure, unbiased oracle.

It’s trained on the internet—a messy mix of human opinions, hot takes, and corporate (and political) agendas. This means it can develop biases, and sometimes, it gets a nudge from the companies behind it.

Take planning a trip, for example. Don’t be shocked if, at some point, you’re nudged toward particular hotels or restaurants, much like sponsored results on Google. It’s a reminder to watch what you’re being fed.

Then there’s what I call “cyclical bias,” and it’s kind of weird.

Let me explain.

Imagine this: you ask an AI for help, it spits out an answer, and you slap that answer online—a blog, a tweet, some code.

Later, the AI trains on that same content, looping its own output back into its brain. It’s like playing telephone with yourself, except the message keeps twisting.

In coding, developers paste AI-generated snippets, bugs and all, into their projects and push them to public repositories. In the next training cycle, the AI swallows its own mistakes and amplifies them across the web.

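This isn’t just a thought experiment. Researchers call it “model collapse”: in experiments, models trained repeatedly on their own output gradually lose diversity and drift away from the original data.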

If we all lean on AI too much, recycling its answers, the internet turns into a giant echo chamber.

Diversity of thought shrinks, and original ideas can get buried.

We end up with a digital monoculture, and we won’t spot it until the cracks show.

Laziness

AI is a HUGE time-saver; there’s no question about it.

Need a quick answer? Done. A code snippet to help build your app? Yours in seconds.

But here’s the trap: it’s too easy.

More and more, we grab the first thing AI tosses us and run with it, no second-guessing. Is it right? Is it complete? Who cares—it’s fast.

This isn’t about laziness in the “couch potato” sense—it’s about outsourcing our critical thinking.

Why wrestle with a problem when an AI can spoon-feed you a solution?

In schools, kids could churn out essays without grasping the material. In startups, founders might greenlight plans based on shiny AI insights that don’t hold water.

What happens when we stop flexing our mental muscles? They atrophy.

Leaning on AI for surface-level fixes risks turning us into shallow thinkers who run on borrowed smarts.

Convenience is great—until it’s not.

Power

Right now, the AI heavyweights—think ChatGPT, Gemini, Llama—are in the hands of a few tech titans.

These companies call the shots on what data trains the models, what answers you get, and who’s allowed in the sandbox.

At that point, it’s less a market than an oligarchy. Closed-source models like OpenAI’s make it worse: they’re black boxes we can’t look inside.

Imagine a world where a handful of players gatekeep the flow of knowledge.

It’s not hard to see how that could slide into manipulation or control. But there’s a counterpunch: open-source AI.

Take DeepSeek: its model weights are free to download, and you can run them on your own hardware if you want (the smaller distilled versions fit on a decent laptop; the full model needs far more). No middleman, no toll booth. You pay nothing, and you control the tool outright. That’s a game-changer, shifting power from the few to the many.

The old path, paying for access to someone else’s AI, becomes a leash.

Open-source cuts it.

What Next?

AI isn’t the bad guy—it’s a tool, and a really good one.

But tools can cut both ways. The more we lean on it, the more we need to keep our eyes open.

Here are a few ways to stay aware of what you’re being fed:

Understand How AI Works (just a little is enough): You don’t need to be an expert, but a working knowledge of AI basics can protect you from blindly trusting flawed outputs. AI systems rely on algorithms, data, and training processes, so their results can reflect biases or gaps in that data. For example, if an AI tool recommends a marketing strategy, knowing it’s trained on past trends helps you see why it might miss emerging shifts.

Compare Multiple Models: Not all AI tools are created equal. Each model has its own strengths, weaknesses, and quirks based on how it was built and trained. In practice, this means using more than one AI for important tasks. Say you’re drafting a report: run your prompts through ChatGPT, then Gemini, then Claude. Compare the tone, depth, and accuracy of each response. You’ll notice differences; maybe one’s more creative, another more factual. This can reveal blind spots and help you pick the best tool for the job, or even blend their strengths.

Question What You’re Told: AI can sound authoritative, but it’s not always right. Build a reflex to double-check its claims. Ask yourself: Does this make sense? Is there evidence? What’s missing? Treat AI like a smart intern: helpful, but in need of supervision. This critical lens keeps you from being led astray by polished nonsense.

Connect with Me

Are you new to the newsletter? Subscribe Here

Learn more about me on my website

Check out my YouTube channel (and subscribe)

If you’re a founder, apply here (Metagrove Ventures) for startup funding

Thanks for reading.

If you like the content, feel free to share, comment, like and subscribe.

Cheers,

Barry.